inconsequence

musings on subjects of passing interest

Large Language Models — A Few Truths

LLMs and derivative products (notably ChatGPT) continue to generate a combination of excitement, apprehension, uncertainty, buzz, and hype. But a few things have become increasingly clear in the two-and-a-bit years since ChatGPT came out and, basically, aced the Turing Test without (as Turing had pointed out when he called it "the Imitation Game") necessarily thinking.

The Imitation Game asks if a machine can imitate the behavior of someone who thinks (whatever that means) by reducing that to one specific behavior: carrying on a conversation.

Just as a Skinnerian behaviorist might suggest that "I can't tell if you're in pain, but you seem to be exhibiting pained behavior", Turing basically said "I can't tell if a machine can think, but I can test whether it seems to exhibit a specific thinking behavior." He's engaging in classic analysis: I can't solve the general problem, but I can identify a simpler, more tractable problem and then we can try to solve that. But almost everyone misses the step where Turing said the general problem is worse than difficult: it's ill-defined.

LLMs aren't thinking, or reasoning, or doing anything like that

You can quickly determine that LLMs, as a class of object, cannot think by looking at how they handle iterative questioning. If you ask an LLM to do something it will often (a) fail to do it (even when it would be extremely easy for it to check whether it had done it) and then (b) tell you it has done exactly what it was asked to do (which shows it's very good at reframing a request as a response).

This should be no surprise. The machines are simply manipulating words. They have no more understanding of the connection between, say, the process of writing code and seeing the results than they do of the connection between Newton's equations of motion and the sensations one feels when sitting on a swing.

So when you say "hey can you write a hello world application for me in Rust?" they can probably do it via analysis of many source texts, some of which quite specifically had to do with that exact task. But they might easily produce code that's 95% correct but doesn't run at all because, not having any real experience of coding, they don't "know" that when you write a hello world program you then run it and see if it works, and if it doesn't you fix the code and try again.

They are, however, perfectly capable of reproducing some mad libs version of a response. So, they might tell you they wrote it, tested it, found a bug, fixed it, and here it is. And yet it doesn't work at all.
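For concreteness, here is roughly what the whole task amounts to. This is a minimal sketch, not anything an LLM produced: the `greeting` function is a hypothetical helper I've introduced so that "run it and see if it works" can be written down as an actual check rather than a claim.

```rust
// Hypothetical helper: returning the text (instead of printing it directly)
// makes the result something we can check.
fn greeting() -> &'static str {
    "Hello, world!"
}

fn main() {
    // The step that gets skipped: actually run the code and compare
    // what it produces with what was asked for.
    assert_eq!(greeting(), "Hello, world!");
    println!("{}", greeting());
}
```

The point is that the check is cheap. Saying "I tested it" and having run something like the assertion above are two very different things.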

And that's just simple stuff.

And look, for stuff where "there is no one correct answer" they generally produce stuff that is in some vague way more-or-less OK. If you ask them to read some text and summarize it, they tend to be fairly successful. But they don't exhibit sound judgment in (for example) picking the most important information because, as noted, they have no concept of what any of the words they are using actually mean.

All that said, when a process is essentially textual and there is a huge corpus of training data for that process, such as patient records with medical histories, test results, and diagnoses, LLMs can quickly outperform humans, who lack both the time and inclination to read every patient record and medical paper online.

There is no Moat

The purported value of LLMs and their uses is a chimera. No-one is going to suddenly get rich because "they have an LLM". Companies will go out of business because they don't have an LLM or workflows that use LLMs.

LLMs will doubtless have enormous economic impacts, probably further hollowing out what is left of the Middle Class, since most people have jobs where "there is no one correct answer" and all they have to do is produce stuff that is in some way more-or-less OK. Just consider asking a question of a salesperson in an electronics store, or of a bureaucrat processing an application based on a form and a body of regulations: do you think a human is better than the average AI? Given a panel of test results and patient history, general purpose LLMs already outperform doctors at delivering accurate diagnoses in some studies.

But UC Berkeley's Sky Computing Lab just released Sky-T1-32B-Preview, an open source clone of not just ChatGPT but the entire "reasoning pipeline" (which OpenAI has not open-sourced), which it trained for $451. (UC Berkeley had already reduced the price of operating systems to zero by clean-room-cloning AT&T UNIX and then open-sourcing it all, basically kickstarting the open source movement.)

So if you invested, say, $150M training one iteration of your LLM six months ago, the value of that investment has depreciated by about 99.9997%.
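The arithmetic is easy to check. A minimal sketch, taking the two figures from this post ($150M and $451) as given and assuming, for the sake of argument, that the older investment is now worth only what it would cost to reproduce; `depreciation_percent` is a name I've made up for illustration.

```rust
/// Percentage of the original cost that is lost if the same result
/// can now be reproduced for `replacement_cost`.
fn depreciation_percent(original_cost: f64, replacement_cost: f64) -> f64 {
    (1.0 - replacement_cost / original_cost) * 100.0
}

fn main() {
    // $150M spent six months ago versus $451 to retrain today.
    let pct = depreciation_percent(150_000_000.0, 451.0);
    println!("{:.4}%", pct); // prints "99.9997%"
}
```

Put another way, $451 is about three millionths of $150M.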

And the sky-high valuations of nVidia are predicated mainly on the costs of training models, not running them. People don't need $1B render farms to run these models. Very good versions of them can run on cell phones, and the full versions can run on suitably beefed up laptops, which is to say, next year's consumer laptops.

I should add that while Sky-T1-32B-Preview allegedly outperformed ChatGPT-o1 on a battery of tests, I played with a 10GB quantized version on my laptop just now, and it produced far worse results, far more slowly than Meta Llama 7B.

— Tonio Loewald, 1/17/2025

Recent Posts

What should a front-end framework do?

6/3/2025

This article introduces xinjs, a highly opinionated front-end framework designed to radically simplify complex web and desktop application development. Unlike frameworks that complicate simple tasks or rely on inefficient virtual DOMs, xinjs leverages native Web Components, direct DOM manipulation, and a unique proxy-based state management system to minimize boilerplate, boost performance, and enhance maintainability, allowing developers to achieve more with less code.

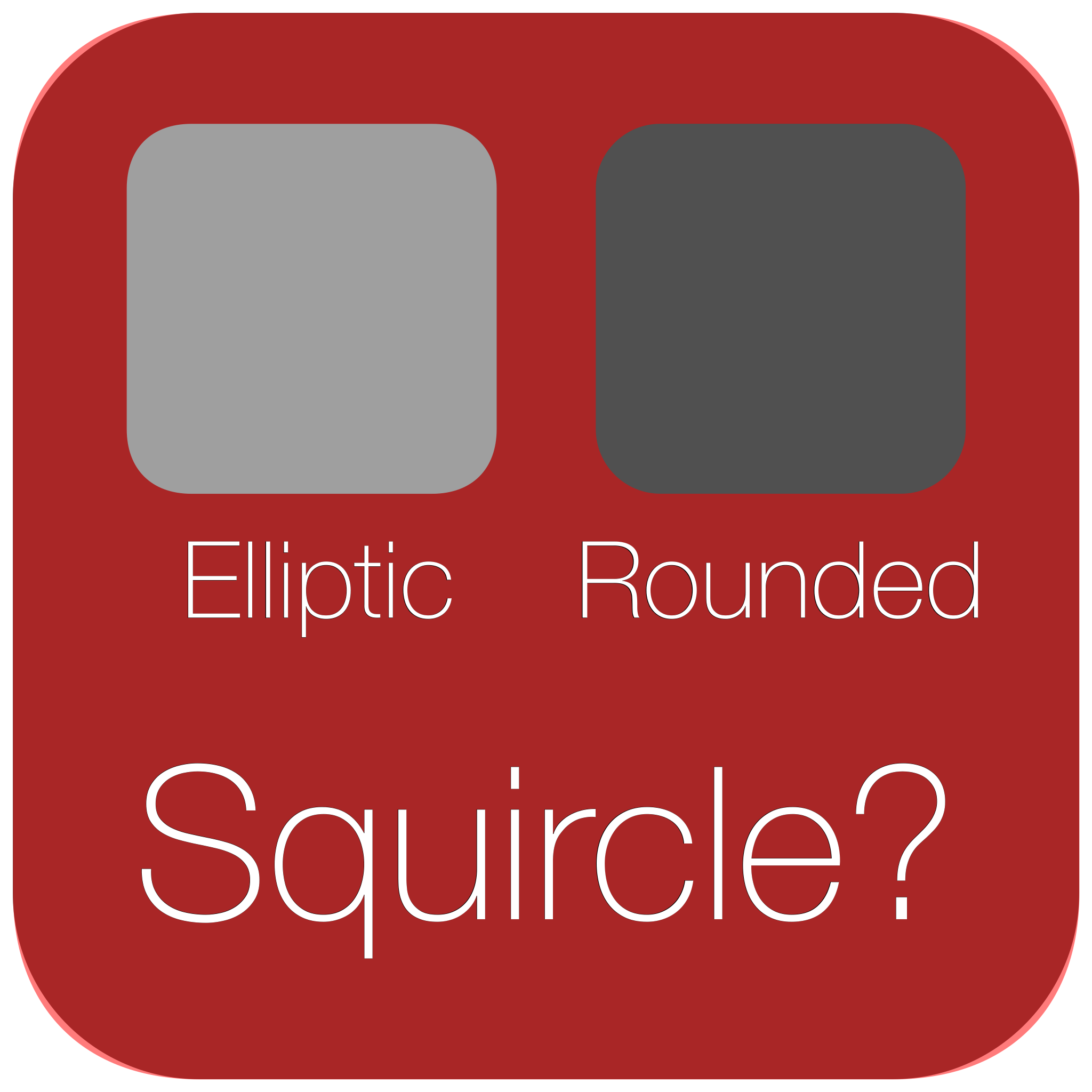

Squircles

5/5/2025

Tired of those boring rounded rectangles? Squircles are the next evolution in smooth, visually appealing shapes, and while perfect execution is tricky, Amadine's does a nice job with them.

Blender Gets Real

3/26/2025

Flow, the Blender-animated film, took home the Oscar for Best Animated Feature. But it's more than just a win for a small team; it's a monumental victory for open-source software and anyone with a vision and a limited budget.

The future's so bright… I want to wear AR glasses

2/4/2025

So much bad news right now… It's all a huge shame, since technology is making incredible strides and it's incredibly exciting. Sure, we don't have Jetsons-style aircars, but here's a list of stuff we do have that's frankly mind-blowing.

Contrary to popular belief, AI may be peaking

1/21/2025

Is artificial intelligence actually getting *smarter*, or just more easily manipulated? This post delves into the surprising ways AI systems can be tricked, revealing a disturbing parallel to the SEO shenanigans of the early 2000s. From generating dodgy medical advice to subtly pushing specific products, the potential for AI to be used for nefarious purposes is not only real but the effects are already visible.

Large Language Models — A Few Truths

1/17/2025

LLMs, like ChatGPT, excel at manipulating language but lack true understanding or reasoning capabilities. While they can produce acceptable responses for tasks with no single correct answer, their lack of real-world experience and understanding can lead to errors. Furthermore, the rapid pace of open-source development, exemplified by projects like Sky-T1-32B-Preview, suggests that the economic value of LLMs may be short-lived, as their capabilities can be replicated and distributed at a fraction of the initial investment.